This wiki article contains incomplete and informal notes about the MPS, the precursor to more formal documentation. Not confidential. Readership: MPS users and developers.

Here's the story of how it happens in the MPS, since the pool-independent chain mechanism was put in about 2001-03.

(Note that the MPS pool-independent chain mechanism is an early (and incomplete) design and implementation. It replaced both the earlier pool-action model, and the AMC-only generation mechanism. Chains could be generalized to a directed acyclic graph in the future, perhaps.)

This story concentrates on AMC pools in a VM arena.

Why do we need a story? Imagine a car dashboard: it has a speedo, a rev-counter, a temperature gauge, and fuel gauge. All this is only helpful if the user has some idea — some simplified story — of what is going on under the bonnet. For a car dashboard, the accompanying story might be this:

"The car's engine can turn round quickly or slowly: the rev-counter shows you how fast it's turning right now. The engine uses up fuel: see the fuel gauge. When the fuel runs out the engine will stop. The faster the engine's turning, the faster it's using fuel (to first approximation). The engine starts cold and should become warm and stay there: see the temperature gauge. If it doesn't there's a problem: stop and get a mechanic. The speedo shows how fast the wheels are turning, in other words how fast the car is going. The only connection between wheels and engine is the gearbox. If you are in neutral the speedo may drop to zero, and that's fine."

The approximations / simplifications / lies we put into the gauges need to match up with the approximations / simplifications / lies in the story. We don't want the rev-counter to be labelled "cam-shaft angular momentum".

The document is the story of:

This story is too complicated for users. I aim to produce a simplified version for MPS users, when I have a better idea of what simplifications are appropriate.

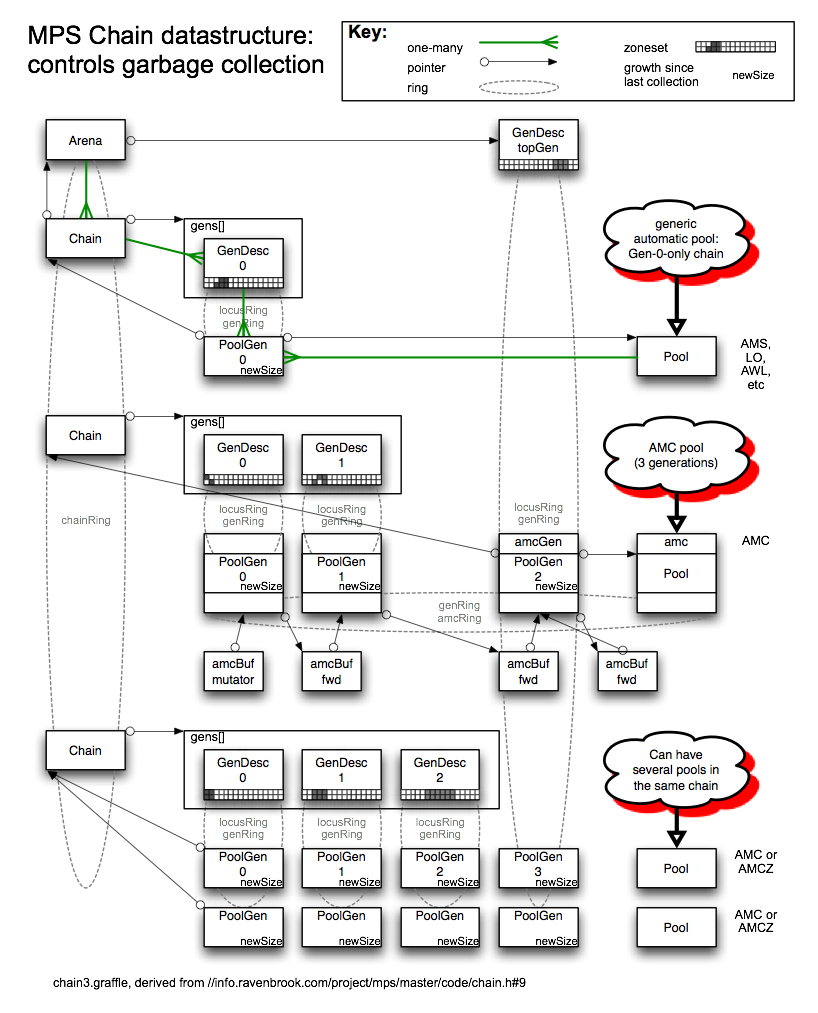

MPS garbage collection is controlled by the Chain datastructure, and by the concept of Zones. A Zone is a stripe of memory. The MPS typically divides the address space into 32 zones.

Refer to the middle chain "AMC pool (3 generations)" in the diagram above. Say the mutator wants three generations: a 100 KB nursery (Gen 0), a 200 KB intermediate generation (Gen 1), and the rest of memory for older stuff (Gen 2). Mutator creates an array of *two* mps_gen_param_s structs:

Mutator passes this array to mps_chain_create, and then uses the chain to create a new AMC pool.

The Chain contains an array of two GenDescs: numbers 0 and 1. The AMC pool creates *three* PoolGens:

What does "generation number" mean? Beware: three different (and possibly contradictory) things:

(The GenDesc itself, which has size and mortality and is perhaps the place you'd expect, does not store a generation number).

Okay, so what does "generation" really mean? Is it the set of size limits that trigger a collection, or the set of things that are collected in response to that trigger? This will be answered in full below, but the summary is:

PoolGen nr and GenDesc index are local to a Chain and relate to the first concept: triggering. PoolGen nr = GenDesc index, except that AMC top PoolGens have no corresponding GenDesc index: take care not to read past the end of the gens[] array.

SegPrefGen-number embodies the second concept: an arena-wide generation number that tends to determine which objects are collected at the same time. For AMC, SegPrefGen-number = PoolGen nr. For non-generational pools SegPrefGen-number is hardwired, or unset.

The PoolGen newSizes are zero initially. The GenDesc zonesets are empty initially.

As the mutator allocates, and as minor collections promote and preserve objects, each "generation" keeps track of its size and location. For each new segment that AMCBufferFill gets:

When AMCBufferFill calls SegAlloc() to ask for new memory segments, it passes the PoolGen's "nr" generation number (0 for mutator allocation in the nursery, 1 or 2 etc when preserving objects) as the SegPrefGen segment-placement preference.

ArenaVM tries hard to keep all segments for a given SegPrefGen-number together in the same zone or zones, and separate from the zones used for various other things. (Such as: zones used by other generation numbers, blacklist zones, and as-yet unused zones).

So hopefully the zoneset for a "nr" generation number will be disjoint from other uses of memory. [Note: if a zoneset gets polluted because of address-space pressure, there's currently no way to 'heal' it again afterwards. That's not good enough for a long-running client. See also the "barge" flag in arenavm's pagesFindFreeWithSegPref(). RHSK 2006-12-04]

All collections start from ArenaStep(). There are two routes into ArenaStep: an explicit call to mps_arena_step(), or an implicit one from the 'time-stealing' ArenaPolls in mps_alloc, mps_reserve, and mps_alloc_pattern_end/reset.

There are three trigger conditions:

Firstly, lots of "spare time". An MPS client's explicit call to mps_arena_step() can say "I've got some spare time" by passing a non-zero interval and multiplier. If (interval x multiplier) is big enough, and it's been long enough since the last one, start a full collection.

[Note: There are many hardwired constants in this trigger. And in fact it may have problems. For example: it tries to have its full collections running for 10% of mps_clock() (ie. wall clock) time. It estimates T, and checks whether 10 T has elapsed since the last full collection it triggered. If committed memory is growing, then each time the trigger checks T will be a bit larger, so 10 T might be receding further into the future, not getting closer. A bit like saying "I tidied my desk 7 days ago, and it will take 1 day to tidy, so I'll wait 3 more days". When the 3 days are up, more papers have accumulated, so you estimate that tidying will take 1.5 days... RHSK 2006-12-06]

Secondly (when ArenaStep calls TracePoll) the infamous "dynamic criterion" is assessed. The MPS needs quite a lot of memory to do a full collection. Memory is being gobbled up by client allocation, and if the MPS waits too long it could get completely 'wedged' or 'chock-a-block', with insufficient free space to do a full collection. It must start a full collection soon enough that we don't completely run out of memory in the middle of doing it. In fact, the MPS should start the full collection even earlier than that, so that the collection can progress at a gentle incremental pace, in parallel with ongoing client allocation. Calculating this criterion is tricky, but I think the idea is:

[Note: ArenaAvail may not be meaningful, especially for a VM arena. Someone more familiar with ArenaReserved() should check. But I note here its major effect on the "dynamic criterion" trigger. RHSK 2006-12-06]

In both cases, the decision about whether a full collection is due is based on total committed memory (ArenaCommitted - ArenaSpareCommitted), so allocation in any pool (even a manual one) can contribute to triggering a full collection. Both these full-collection triggers call traceStartCollectAll().

Thirdly, if no full collection is triggered, look for a chain with a full Gen 0: where the sum of the chain's PoolGen 0 newSizes exceeds GenDesc 0 capacity. If there's a choice, pick the chain whose Gen 0 is most over capacity. The most over-capacity chain will start a minor collection, by calling ChainCondemnAuto(). Because the decision is based on PoolGen 0 newSize, automatic pools only trigger a minor collection if they are incrementing the newSize in their PoolGen 0, and their GenDesc 0 capacity is set to an achieveable value.

"newSize" means how much has accumulated since the last time this generation was condemned; it does not count objects that survived-in-place at the last collection. TotalSize = previousSurvivorsSize + newSize. AMC usually preserves by promotion to the next generation, so previousSurvivorsSize is small. Other pools (such as AMS) preserve in place, so previousSurvivorsSize tends to build up. If we assume that most previous survivors will survive again, then (newSize x mortality) gives an estimate of how much garbage we might expect to reclaim.

traceStartCollectAll() finds all chains, all the PoolGens in Gen 0 of those chains, all the pools those PoolGens are part of, all the segments of those pools, and condemns all those segments:

traceStartCollectAll():

traceCondemnAll()

for chain in all chains:

ChainCondemnAll(chain)

ChainCondemnAll(chain):

for PoolGen in GenDesc 0 of chain:

for Seg in (PoolGen->pool)->SegRing:

TraceWhiten(Seg)

So all automatic (garbage collected) pools must have a chain (even if they aren't generational) or their objects won't get condemned.

By the way: MRG pools are scanned, but not collected: in other words they are manual (hence the "M" in their name). They do not have a chain.

The first step is to find the list of adjacent GenDescs that are over-capacity in this chain only: Gen 0 (the nursery), plus any adjacent higher generations that are also over-capacity. (So if gens 0, 1, and 3 are over-capacity, but 2 is not over-capacity yet, then a minor collection will condemn 0 and 1, but not 2 or 3).

The second step is not obvious: these GenDescs have been recording the zoneset of all the segments ever added into that GenDesc (as long as the pool noted it by calling PoolGenUpdateZones). ChainCondemnAuto() calls TraceCondemnZones() to condemn the full zoneset ever touched by a segment in any of the condemned GenDescs.

Why condemn the whole zoneset? Well, minor collections rely on remembered sets to work well, and the MPS implements remembered sets by recording the zone summary of references in a segment. We hope that the references that will keep the survivors alive are concentrated in only a few older-generation pages, which we can cheaply find using their zone summaries. Because of this, if the nursery we are trying to collect lives in zoneset 23 (say), we may as well collect everything in zoneset 23 at the same time, even if it also contains objects from a different chain.

So the major determiner of which objects will get collected at the same time is what SegPrefGen-number their pool passes in its call SegAlloc() when allocating a new segment. The generational AMC pool takes this number from the PoolGen's "nr" field. Some other pools hardwire it (AWLGen = 1, LOGen = 1). Some do not set it (AMS).

To condemn the zoneset, TraceCondemnZones() uses the SegFirst/SegNext() iterator, and for every segment that is wholly within the condemned zones, it calls TraceAddWhite(seg).

Full collections are easy: all pools can trigger a full collection (see "Triggering" above). Automatic pools must have a chain with a GenDesc 0 PoolGen, so that their objects get condemned by a full collection (see "Full collection" above).

Minor collections are trickier. They are triggered by an over-capacity Gen 0. Automatic pools only trigger a minor collection if they are incrementing the newSize in PoolGen 0, and the GenDesc 0 capacity is set to an achieveable value.

A minor collection condemns a set of zones. To control which minor collections it participates in (has its dead objects collected by), an automatic pool should make sure its segments are in suitable zones, such as by calling SegAlloc with a SegPrefGen-number that matches one used by a pool that is going to trigger minor collections (such as AMC).

Here's what the actual AMS, LO, and AWL pools do currently:

AMS pools have a Gen-0-only chain, so AMS objects are collected by full collections. Allocating AMS objects increments the PoolGen 0 newSize, and AMS Gen 0 capacity is set by the client: the capacity can be tuned to make AMS allocation trigger a minor collection at a suitable time. AMS-triggered minor collections will condemn all AMS objects. AMS does not specify a SegPrefGen-number, so arenavm allocates segments in the first non-blacklisted zone with enough space. This means an AMS-triggered minor collection (which condemns all AMS objects) will condemn a 'random' subset of zones used for all automatic pools, which could include a random selection of parts of AMC generations. Conversely, AMC's generationally-structured minor collections will also collect random subsets of AMS objects.

LO and AWL pools do not take a chain argument, but they have a 'hidden' Gen-0-only chain, so their objects are collected by full collections. The hidden chains have hardwired Gen 0 capacity. AWL's Gen 0 capacity is hardwired to SizeMAX KB, so AWL objects will never trigger a minor collection. LO's Gen 0 capacity is hardwired to 1024 KB, and it increments PoolGen 0 newSize, so each 1 MB of new LO allocation will trigger a minor collection. Both AWL and LO segment-placement preference is hardwired to SegPrefGen-number 1 (note inconsistency with the PoolGen "nr"!). LO-triggered minor collections will tend to also condemn some or all AMC-generation-1 objects (but no AMC nursery objects), some or all AWL objects, and some AMS objects. Conversely, AMC-triggered minor collections that include AMC-generation-1 will tend to also condemn some or all LO and AWL objects, and some AMS objects.

[To summarise this section: the behaviour is complex, hard to predict, and may not have been designed to be like this. AMS not specifying a SegPrefGen, and arenavm treating that as "anywhere but blacklist", is probably a bug, especially now there are client-tunable AMS triggered minor collections. This whole area should probably be looked at. RHSK 2006-12-06].

The MPS can regulate its progress by comparison to:

Amount of allocation is the traditional way for the MPS to measure time. MPS source code often calls such an amount a "time" when it is used for rate-control purposes, even though the units are bytes (or sometimes KiB = 1024 bytes). Amount can be total, or some subclass such as just mutator-allocated objects, or just objects in one particular pool or generation. The amount can be everything since arena-creation, or just the delta since some event such as the last collection (of a specified subclass of objects). The amount can be of object bytes only, or can be rounded up to include MPS overheads such as alignment rounding and partly-filled segments. Sometimes the amount is incremented and decremented with approximate tallies, and a systematic error or drift could be introduced.

Regulation by mps_clock()-time is new, using the mps_clocks_per_sec() scale added for the "opportunism" feature (2003-02-17 change 39673 version 1.101; see timeline). It is only used when the mutator calls mps_arena_step() with a time interval.

The rate at which the collection is advanced is called the trace rate, and is given a fixed value by TraceStart(). The units are how many bytes of live objects to scan in each traceQuantum().

Determining the trace rate follows three logical steps:

For full collections, finishingTime (units: mutator allocated bytes) is chosen so that this much mutator allocation, plus a hardwired estimate of how much will survive the full collection (49%), all of which is assumed to be new allocation (preserved by copy, not preserved in place), will exactly exhaust ArenaAvail().

For minor collections, ChainCondemnAuto() carefully calculates a weighted overall mortality. The finishingTime (units: mutator allocated bytes) is hardwired proportional to the amount condemned by this collection.

[Why is it proportional to condemned? I don't know. Making it proportional to the amount to be scanned would 'steal' a more predictable fraction of time from the mutator, surely? We want to control the short term eagerness during a collection. (The long term eagerness is controlled by what triggers a GC and what is condemned, not by the trace->rate.... as long as long-term eagerness is lower than short-term eagerness, ie. as long as some of the time there is no collection happening at all). The short-term eagerness is surely about stealing no more than x% of time from mutator allocation, so should compare collector CPU cycles (ie. amount we scan) to mutator allocation CPU cycles (ie. fillMutatorSize). RHSK 2006-12-05]

Four things advance the collection:

How much work does each one do?

Barrier hits do the minimum possible: scan one unit of protection.

ArenaPoll: if the arena is not clamped and the mutator has allocated ArenaPollALLOCTIME bytes (or more) since the last time ArenaPoll did some work, it will do a minimal ArenaStep.

ArenaStep calls TracePoll once (minimal), or if given a time interval repeatedly until the time has elapsed.

TracePoll may start a new collection. If there is a collection, it will do exactly one traceQuantum().

traceQuantum() scans trace->rate bytes of live objects. (Actually it always scans a whole segment at a time, and even if it scans too much this time it will still scan at least trace->rate bytes next time too).

mps_arena_park will loop doing traceQuantums until the collection is complete.

Reclaim only happens at the end of collection. Hopefully lots of segments will be white (dead), and will be released, shrinking ArenaCommitted.

[Future: Indeed, in some sense, reclaim can only happen at the end of collection. A large collection C could be subdivided into smaller collections C1 and C2, and perhaps C1 might finish earlier. But choosing how to subdivide is a combinatorial problem, unless the mutator can give us a clue.]

2006-11-30 RHSK Created after much research, incomplete. 2006-12-01 RHSK What triggers a GC? 2006-12-04 RHSK What triggers a GC: clarify and expand, add diagram 2006-12-05 RHSK AMS, LO, AWL. Rate. What advances a collection? 2006-12-06 RHSK correct dynamic criterion, newSize, trace rate 2006-12-06 RHSK Purpose. Generation can mean 3 things. 2006-12-06 RHSK Notes on problem areas. Reclaim. Simplify diagram. 2006-12-07 RHSK What does generation really mean?

This document is copyright © 2006 Ravenbrook Limited. All rights reserved. This is an open source license. Contact Ravenbrook for commercial licensing options.

Redistribution and use in source and binary forms, with or without modification, are permitted provided that the following conditions are met:

This software is provided by the copyright holders and contributors "as is" and any express or implied warranties, including, but not limited to, the implied warranties of merchantability, fitness for a particular purpose, or non-infringement, are disclaimed. In no event shall the copyright holders and contributors be liable for any direct, indirect, incidental, special, exemplary, or consequential damages (including, but not limited to, procurement of substitute goods or services; loss of use, data, or profits; or business interruption) however caused and on any theory of liability, whether in contract, strict liability, or tort (including negligence or otherwise) arising in any way out of the use of this software, even if advised of the possibility of such damage.